The Duckietown Platform

✎Modified 2021-10-31 by tanij

The Duckietown platform has many components.

This section focuses on the physical platform used for the embodied robotic challenges.

For examples of Duckiebots driving, see the set of demo videos of Duckiebots driving in Duckietown (opmanual_duckiebot/demos).

The actual embodied challenges will be described in more detail in LF, LFV, LFI.

The sequence of the challenges was chosen to increase the difficulty gradually, with each challenge extending the previous solution to more general situations. We recommend that you tackle the challenges in this same order.

The Duckietown Platform


There are three main parts of the platform with which you will interact:

- Simulation and training environment, which allows testing in simulation before trying on the real robots.

- Duckietown Autolabs, in which to try the code in controlled and reproducible conditions.

- Physical Duckietown platform: miniature autonomous vehicles and smart cities in which the vehicles drive. The Duckiebots (robot hardware; see opmanual_duckiebot/duckiebot-configurations) and Duckietown (environment) are rigorously specified (see opmanual_duckietown/dt-ops-appearance-specifications), which makes the development extremely repeatable.

If you have a Duckiebot you can refer to the Duckiebot operational manual (opmanual_duckiebot/book) for step-by-step instructions on how to assemble, maintain, calibrate and operate your robot. If you would like to acquire a Duckiebot, please go to the Duckietown project store.

The Duckiebots officially supported for AI-DO 6 (2021) are the DB21 Duckiebots. We recommend that you also build your Duckietowns according to the specifications. The necessary materials can be sourced locally almost anywhere in the world, but compliant “one-click” AI-DO kits for each challenge are also available.

For any questions regarding Duckietown hardware you can reach out to hardware@duckietown.com.

Duckiebots and Duckietowns


We briefly describe the physical Duckietown platform, which comprises autonomous vehicles (Duckiebots) and a customizable model urban environment (Duckietown).

The Duckiebot


Duckiebots are designed with the objectives of affordability, modularity and ease of construction. They are equipped with a front-facing camera with a 160-degree fish-eye lens, capable of streaming images reliably at 30 fps, and with wheel encoders on the motors. DB21 Duckiebots are also equipped with IMUs and front-facing time-of-flight sensors.

Actuation is provided through two DC motors that independently drive the front wheels (differential drive configuration), while the rear end of the Duckiebot is equipped with a passive omnidirectional wheel.
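The differential drive configuration described above can be illustrated with the standard textbook kinematics that relate the two wheel speeds to the motion of the robot body. This is a minimal sketch, not code from the Duckietown software stack: the function names and the wheel radius and baseline values are placeholders chosen for illustration, not Duckiebot specifications.

```python
def body_velocity(omega_left, omega_right, wheel_radius, baseline):
    """Forward kinematics of a differential-drive robot.

    omega_left, omega_right: wheel angular speeds in rad/s
    wheel_radius: wheel radius in metres
    baseline: distance between the two driven wheels in metres
    Returns (v, omega): forward speed in m/s and turn rate in rad/s,
    where a positive omega is a counter-clockwise (left) turn.
    """
    v_left = wheel_radius * omega_left
    v_right = wheel_radius * omega_right
    v = (v_right + v_left) / 2.0
    omega = (v_right - v_left) / baseline
    return v, omega


def wheel_speeds(v, omega, wheel_radius, baseline):
    """Inverse kinematics: wheel angular speeds that produce (v, omega)."""
    omega_left = (v - omega * baseline / 2.0) / wheel_radius
    omega_right = (v + omega * baseline / 2.0) / wheel_radius
    return omega_left, omega_right


# Placeholder geometry, assumed for illustration only.
WHEEL_RADIUS_M = 0.03
BASELINE_M = 0.10

# Equal wheel speeds drive straight; a faster right wheel turns left.
print(body_velocity(10.0, 10.0, WHEEL_RADIUS_M, BASELINE_M))
print(body_velocity(5.0, 10.0, WHEEL_RADIUS_M, BASELINE_M))
```

Because the two motors are driven independently, steering needs no separate mechanism: any difference between the two wheel speeds turns the robot, and the passive rear wheel simply follows.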

All the computation is done onboard on a:

- DB19: Raspberry Pi 3B+ computer,

- DB21: Jetson Nano 2 GB (DB21M) or Jetson Nano 4 GB (DB21J).

Power is provided by a Duckiebattery (opmanual_duckiebot/db-opmanual-preliminaries-electronics), which provides several hours of operation.

The Duckietown


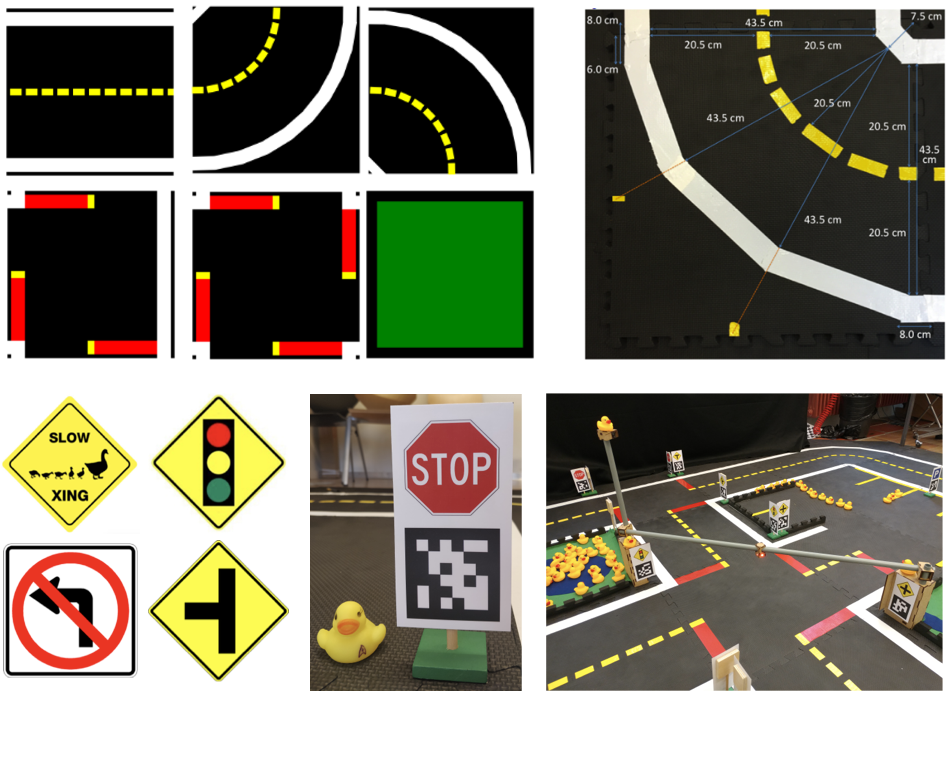

Duckietowns are modular, structured environments built on two layers: the road layer and the signal layer (Figure 2.2). Detailed specifications can be found in the appearance specifications (opmanual_duckietown/dt-ops-appearance-specifications).

There are six well-defined road segments: straight, left and right 90 deg turns, 3-way intersection, 4-way intersection, and empty tile. Each is built on individual tiles, and their interlocking enables customizable city sizes and topographies. The appearance specifications detail the color and size of the lines as well as the geometry of the roads.
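To make the tile-based construction concrete, a city can be thought of as a rectangular grid in which every cell holds one of the six segment types. The sketch below is a hypothetical representation written for this explanation: the `Tile` names, the grid layout, and `city_summary` are assumptions, not the official Duckietown map format, and tile orientation is deliberately left out.

```python
from collections import Counter
from enum import Enum


class Tile(Enum):
    """The six road segment types listed above (names are illustrative)."""
    STRAIGHT = "straight"
    LEFT_TURN = "left_turn"
    RIGHT_TURN = "right_turn"
    THREE_WAY = "3way"
    FOUR_WAY = "4way"
    EMPTY = "empty"


S, L, E = Tile.STRAIGHT, Tile.LEFT_TURN, Tile.EMPTY

# A 3x3 loop driven counter-clockwise, so every corner is a left turn.
LOOP_CITY = [
    [L, S, L],
    [S, E, S],
    [L, S, L],
]


def city_summary(grid):
    """Return (rows, cols, tile counts) for a rectangular tile grid."""
    rows = len(grid)
    cols = len(grid[0]) if rows else 0
    if any(len(row) != cols for row in grid):
        raise ValueError("all rows must have the same number of tiles")
    counts = Counter(tile for row in grid for tile in row)
    return rows, cols, counts


rows, cols, counts = city_summary(LOOP_CITY)
print(rows, cols, {tile.value: n for tile, n in counts.items()})
```

Enlarging the city then amounts to adding rows or columns of tiles, which is what makes the city size customizable.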

The signal layer comprises street signs and traffic lights. Street signs enable global localization of Duckiebots in the city (knowing where they are within a predefined map) and interpretation of intersection topologies. Each sign combines an AprilTag [1] with the typical road sign symbol. The size, height and positioning of the signs relative to the road are specified. Many signs are supported, including intersection type (3- or 4-way), stop signs, road names, and pedestrian crossings.

Simulation


We provide a cloud simulation environment for training.

As in the last DARPA Robotics Challenge, we use the simulation as a first screening of the participants’ submissions. The submitted agent code must run in simulation and perform sufficiently well to gain access to the Autolabs.

Simulation environments for each of the individual challenges are provided as Docker containers with clearly specified APIs. The baseline solutions for each challenge are provided as separate containers. When both containers (the simulation and the corresponding solution) are loaded and configured correctly, the simulation effectively replaces the real robot(s). A proposed solution can be uploaded to our cloud servers, where it is automatically run against our pristine version of the simulation environment (on a cluster), and a score is assigned and returned to the participant.

Examples of the simulators provided are shown on the Duckietown Challenges server, for example an evaluated LF submission from AI-DO 5.

This simulator is also integrated with the OpenAI Gym environment for reinforcement learning agent training. An API for designing reward functions or tweaking domain randomization will be provided.
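The following sketch illustrates what a Gym-style interaction loop with a pluggable reward function can look like. It is not the Duckietown simulator or its API: `StubLaneEnv`, `lane_reward`, and `proportional_policy` are hypothetical stand-ins, the observation is a simplified lane pose (lateral offset and heading error) rather than a camera image, and the classic four-value `step` return of OpenAI Gym is assumed.

```python
import math
import random


def lane_reward(d, phi, v, offset_penalty=2.0):
    """Hypothetical reward: progress along the lane minus an offset penalty."""
    return v * math.cos(phi) - offset_penalty * abs(d)


class StubLaneEnv:
    """Toy stand-in exposing Gym-style reset() and step() methods.

    State: lateral offset d (m) and heading error phi (rad).
    Action: (forward speed in m/s, turn rate in rad/s).
    """

    def __init__(self, reward_fn, dt=1.0 / 30.0, half_lane_width=0.10,
                 max_steps=300, seed=0):
        self.reward_fn = reward_fn
        self.dt = dt
        self.half_lane_width = half_lane_width
        self.max_steps = max_steps
        self.rng = random.Random(seed)
        self.d, self.phi, self.steps = 0.0, 0.0, 0

    def reset(self):
        # Start slightly off-centre, aligned with the lane.
        self.d = self.rng.uniform(-0.05, 0.05)
        self.phi = 0.0
        self.steps = 0
        return (self.d, self.phi)

    def step(self, action):
        v, omega = action
        self.phi += omega * self.dt
        self.d += v * math.sin(self.phi) * self.dt
        self.steps += 1
        left_lane = abs(self.d) > self.half_lane_width
        done = left_lane or self.steps >= self.max_steps
        reward = self.reward_fn(self.d, self.phi, v)
        return (self.d, self.phi), reward, done, {"left_lane": left_lane}


def proportional_policy(observation, v=0.2, k_d=20.0, k_phi=5.0):
    """Steer back toward the lane centre at constant forward speed."""
    d, phi = observation
    return (v, -k_d * d - k_phi * phi)


def run_episode(env, policy):
    """Run one episode and return (total reward, last observation, last info)."""
    observation = env.reset()
    total_reward, done = 0.0, False
    while not done:
        observation, reward, done, info = env.step(policy(observation))
        total_reward += reward
    return total_reward, observation, info


total, final_observation, info = run_episode(StubLaneEnv(lane_reward),
                                             proportional_policy)
print(f"total reward: {total:.2f}, final offset: {final_observation[0]:.6f} m")
```

Passing the reward function into the environment keeps the dynamics and the training objective separate, so a different reward can be tried without touching the environment code.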

Duckietown Autolabs


The idea of an Autolab is inspired by Georgia Tech’s Robotarium (contraction of robot and aquarium) [2].

The use of an Autolab has two main advantages:

- Convenience: It allows convenient access to a complete robot setup.

- Reproducibility: It allows for multiple people to run the experiments in repeatable controlled conditions.

You can find detailed information on Duckietown Autolabs in our paper: Integrated Benchmarking and Design for Reproducible and Accessible Evaluation of Robotic Agents.

If you would like to cite Duckietown Autolabs, please use:

@INPROCEEDINGS{tani2020duckienet,
  author={Tani, Jacopo and Daniele, Andrea F. and Bernasconi, Gianmarco and Camus, Amaury and Petrov, Aleksandar and Courchesne, Anthony and Mehta, Bhairav and Suri, Rohit and Zaluska, Tomasz and Walter, Matthew R. and Frazzoli, Emilio and Paull, Liam and Censi, Andrea},
  booktitle={2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
  title={Integrated Benchmarking and Design for Reproducible and Accessible Evaluation of Robotic Agents},
  year={2020},
  pages={6229-6236},
  doi={10.1109/IROS45743.2020.9341677}
}

Computational substrate available


For the competition we will provide several options for computational power:

- The “purist” option, in which all processing is done onboard the Duckiebots.

- The offboard option, in which the images are streamed to a base station with a powerful GPU. This increases the available computational power but also increases the latency in the control loop.
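A back-of-the-envelope calculation shows why the added latency matters: while a command is being computed and delivered, the robot keeps moving on its previous command. The sketch below assumes the 30 fps camera rate mentioned earlier; the compute times, network round trip, and driving speed are made-up numbers for illustration, not measurements of any Duckiebot or base station.

```python
FRAME_PERIOD_S = 1.0 / 30.0  # camera frame period at 30 fps


def control_latency(compute_s, network_round_trip_s=0.0):
    """Time from image capture to motor command, ignoring other delays."""
    return compute_s + network_round_trip_s


def distance_before_reaction(speed_m_s, latency_s):
    """Distance driven on the old command while the new one is computed."""
    return speed_m_s * latency_s


# All numbers below are hypothetical.
SPEED_M_S = 0.3
onboard = control_latency(compute_s=0.020)
offboard = control_latency(compute_s=0.005, network_round_trip_s=0.050)

for name, latency in (("onboard", onboard), ("offboard", offboard)):
    frames = latency / FRAME_PERIOD_S
    distance_cm = 100.0 * distance_before_reaction(SPEED_M_S, latency)
    print(f"{name}: {latency * 1000:.0f} ms, "
          f"{frames:.2f} frame periods, {distance_cm:.2f} cm travelled")
```

With these assumed numbers the base station computes faster, yet the network round trip still makes the offboard loop slower end to end; whether that trade is worthwhile depends on how much extra computation the agent actually needs.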

Interface


Each Duckiebot has the following interface to the physical or simulated Duckietown.

Inputs:

A Duckiebot has a front-facing camera and encoders on each motor, as described in the Duckiebot section above.

- Thus, the Duckiebot receives images (both in simulation and physical reality) reliably at a rate of 30 fps.

Outputs:

The Duckiebot interacts with the world through its actuators, its wheel motors.

- In both simulation and reality, the output of the Duckiebot is its two motor command signals.
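The interface above (images in, two motor commands out) can be sketched as a small Python protocol. Everything here is an assumption made for illustration: the names `Agent`, `MotorCommands`, and `compute_commands`, the command range of [-1, 1], and the toy grayscale image type are not taken from the Duckietown protocol definitions, and the example agent is a toy, not a lane-following baseline.

```python
from dataclasses import dataclass
from typing import List, Protocol

# Toy grayscale image: rows of pixel intensities in [0, 1].
Image = List[List[float]]


@dataclass(frozen=True)
class MotorCommands:
    """Hypothetical output type: one command per wheel motor."""
    left: float
    right: float


class Agent(Protocol):
    """Anything that maps a camera image to two motor commands."""

    def compute_commands(self, image: Image) -> MotorCommands:
        ...


def clip(value: float, low: float = -1.0, high: float = 1.0) -> float:
    """Clamp a command to the assumed valid range."""
    return max(low, min(high, value))


class BrightnessSeekingAgent:
    """Toy agent that steers toward the brighter half of the image."""

    def __init__(self, base_speed: float = 0.3, gain: float = 0.5) -> None:
        self.base_speed = base_speed
        self.gain = gain

    def compute_commands(self, image: Image) -> MotorCommands:
        half = len(image[0]) // 2
        left_sum = sum(sum(row[:half]) for row in image)
        right_sum = sum(sum(row[half:]) for row in image)
        total = left_sum + right_sum
        balance = 0.0 if total == 0 else (right_sum - left_sum) / total
        # Brighter on the right: speed up the left wheel to turn right.
        return MotorCommands(
            left=clip(self.base_speed + self.gain * balance),
            right=clip(self.base_speed - self.gain * balance),
        )


agent: Agent = BrightnessSeekingAgent()
print(agent.compute_commands([[0.0, 0.0, 1.0, 1.0], [0.0, 0.0, 1.0, 1.0]]))
```

Since the interface is the same in simulation and on the physical robot, an agent written against it does not need to know which of the two is supplying the images.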