Part 2: Affine Transformations

✎Modified 2020-10-22 by sageshoyu

Background Information

✎Modified 2020-09-08 by sageshoyu

In order to estimate the PiDrone’s position (a 2-dimensional column vector $\vec{v} = [x \; y]^T$) using the camera, you will need to use affine transformations. An affine transformation $f: \mathbb{R}^n \to \mathbb{R}^m$ is any transformation of the form $\vec{v} \mapsto A\vec{v} + \vec{b}$, where $A \in \mathbb{R}^{m \times n}$ and $\vec{b} \in \mathbb{R}^m$. The affine transformations we are interested in are rotation, scale, and translation in two dimensions. So, the affine transformations we will look at will map vectors in $\mathbb{R}^2$ to other vectors in $\mathbb{R}^2$.

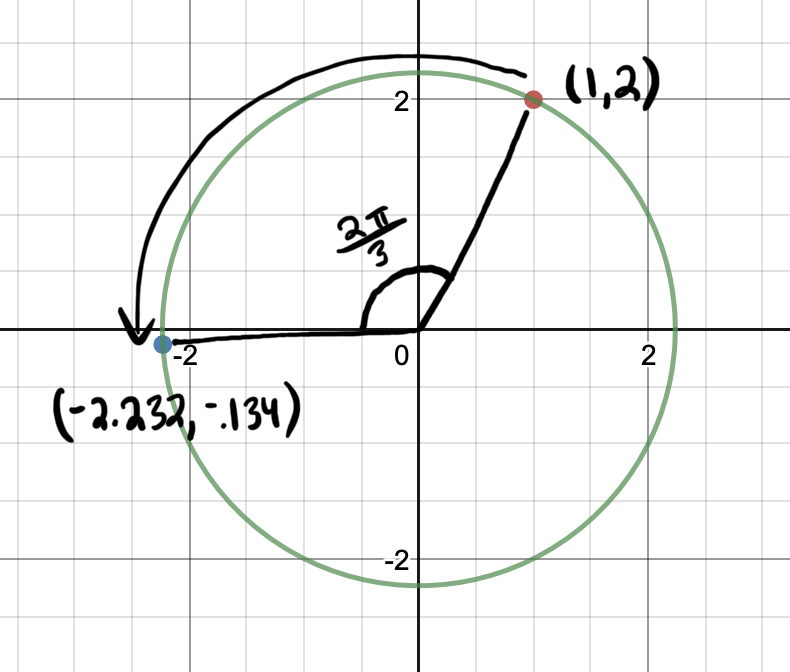
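The general form $A\vec{v} + \vec{b}$ can be sketched in a few lines of plain Python. This is only an illustration: the helper name `apply_affine` and the numbers are assumptions chosen for the example, not values from the project code.

```python
def apply_affine(A, b, v):
    """Return A v + b, where A is a list of rows and b, v are lists."""
    return [sum(a_ij * v_j for a_ij, v_j in zip(row, v)) + b_i
            for row, b_i in zip(A, b)]

# Illustrative values (assumed): stretch x by 2, keep y, then shift by (1, -1).
A = [[2.0, 0.0],
     [0.0, 1.0]]
b = [1.0, -1.0]
v = [3.0, 4.0]

result = apply_affine(A, b, v)
print(result)  # prints [7.0, 3.0]
```

Rotation and scale use only the matrix part `A` (with `b` equal to zero), while translation uses only `b`.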

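Let’s first look at rotation. We can rotate a column vector $\vec{v}$ about the origin by the angle $\theta$ by premultiplying it by the following matrix:

$$\begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix}$$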

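Let’s look at an example. To rotate a vector $\vec{v} = [x \; y]^T$ by an angle $\theta$, we premultiply the vector by the rotation matrix:

$$\begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} x\cos\theta - y\sin\theta \\ x\sin\theta + y\cos\theta \end{bmatrix}$$

A graphical representation of the transformation is shown below. The vector $\vec{v}$ is rotated about the origin to get the rotated vector.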

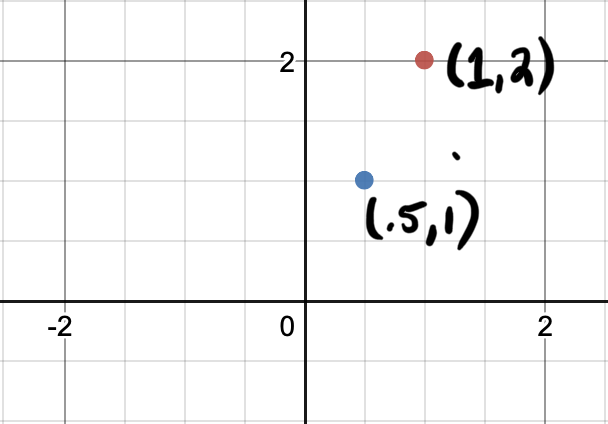
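As a quick numerical check of the rotation matrix, here is a minimal sketch in plain Python. The vector and angle are assumed for illustration and are not the values from the figure; `rotation_matrix` and `matvec` are hypothetical helper names.

```python
import math

def rotation_matrix(theta):
    """2x2 matrix that rotates a column vector by theta radians about the origin."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s],
            [s,  c]]

def matvec(M, v):
    """Premultiply the vector v by the matrix M."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

# Assumed example: rotate the unit vector along x by 90 degrees.
v = [1.0, 0.0]
rotated = matvec(rotation_matrix(math.pi / 2), v)

# Round away floating-point noise such as 6e-17 before printing.
print([round(c, 6) for c in rotated])  # prints [0.0, 1.0]
```

Rotation leaves the length of the vector unchanged; only its direction changes.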

Next, let’s look at how scale is represented. We can scale a vector $\vec{v}$ by a scale factor $s$ by premultiplying it by the following matrix:

$$\begin{bmatrix} s & 0 \\ 0 & s \end{bmatrix}$$

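We can scale a single point $[x \; y]^T$ by a factor of 0.5 as shown below:

$$\begin{bmatrix} 0.5 & 0 \\ 0 & 0.5 \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} 0.5x \\ 0.5y \end{bmatrix}$$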

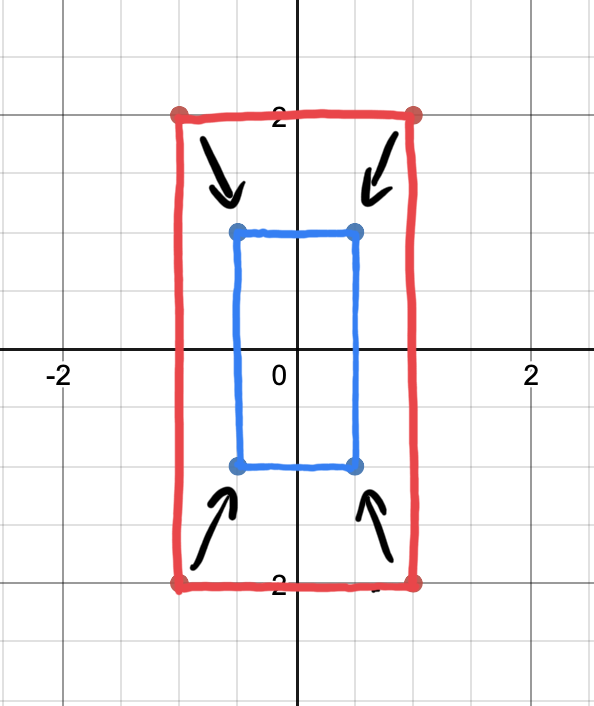

When discussing scaling, it is helpful to consider multiple vectors, rather than a single vector. Let’s look at all the points on a rectangle and multiply each of them by the scale matrix individually to see the effect of scaling by a factor of 0.5.

Now we can see that the rectangle was scaled by a factor of 0.5.
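The rectangle experiment can be reproduced with a short sketch. The corner coordinates below are assumed for illustration (the original rectangle is only shown in the figure), and only the corners are transformed, since every other point on the rectangle scales the same way.

```python
def scale_matrix(s):
    """2x2 matrix that scales a column vector by the factor s."""
    return [[s, 0.0],
            [0.0, s]]

def matvec(M, v):
    """Premultiply the vector v by the matrix M."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

# Assumed rectangle corners, listed counter-clockwise.
rectangle = [[0.0, 0.0], [4.0, 0.0], [4.0, 2.0], [0.0, 2.0]]

S = scale_matrix(0.5)
scaled = [matvec(S, corner) for corner in rectangle]
print(scaled)  # prints [[0.0, 0.0], [2.0, 0.0], [2.0, 1.0], [0.0, 1.0]]
```

Note that scaling is relative to the origin: a corner sitting at the origin stays put, and every other point moves halfway toward it.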

What about translation? Remember that an affine transformation is of the form $\vec{v} \mapsto A\vec{v} + \vec{b}$. You may have noticed that rotation and scale are represented by only a matrix $A$, with the vector $\vec{b}$ effectively equal to 0. We could represent translation by simply adding a vector $\vec{b} = [dx \; dy]^T$ to our vector $\vec{v}$. However, it would be convenient if we could represent all of our transformations as matrices, and then obtain a single transformation matrix that scales, rotates, and translates a vector all at once. We could not achieve such a representation if we represent translation by adding a vector.

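So how do we represent translation (moving $dx$ in the $x$ direction and $dy$ in the $y$ direction) with a matrix? First, we append a 1 to the end of $\vec{v}$ to get $\vec{v}' = [x \; y \; 1]^T$. Then, we premultiply $\vec{v}'$ by the following matrix:

$$\begin{bmatrix} 1 & 0 & dx \\ 0 & 1 & dy \\ 0 & 0 & 1 \end{bmatrix}$$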

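Even though we are representing our $x$ and $y$ positions with a 3-dimensional vector, we are only ever interested in the first two elements, which represent our $x$ and $y$ positions. The third element of $\vec{v}'$ is always equal to 1. Notice how premultiplying $\vec{v}'$ by this matrix adds $dx$ to $x$ and $dy$ to $y$:

$$\begin{bmatrix} 1 & 0 & dx \\ 0 & 1 & dy \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} x \\ y \\ 1 \end{bmatrix} = \begin{bmatrix} x + dx \\ y + dy \\ 1 \end{bmatrix}$$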

So this matrix is exactly what we want!
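The appended 1 is what lets a matrix product perform an addition. A minimal sketch, with assumed values and hypothetical helper names (`translation_matrix`, `to_homogeneous`, `matvec`):

```python
def translation_matrix(dx, dy):
    """3x3 matrix that moves a point by dx along x and dy along y."""
    return [[1.0, 0.0, dx],
            [0.0, 1.0, dy],
            [0.0, 0.0, 1.0]]

def to_homogeneous(v):
    """Append a 1 to a 2D vector so it can be used with 3x3 matrices."""
    return [v[0], v[1], 1.0]

def matvec(M, v):
    """Premultiply the vector v by the matrix M."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

# Assumed example: move the point (2, 3) by dx = 5 and dy = -1.
v = [2.0, 3.0]
moved = matvec(translation_matrix(5.0, -1.0), to_homogeneous(v))
print(moved)  # prints [7.0, 2.0, 1.0]
```

The last element of the result is still 1, so the output can be fed straight into another 3x3 transform.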

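As a final note, we need to modify our scale and rotation matrices slightly in order to use them with $\vec{v}' = [x \; y \; 1]^T$ rather than $\vec{v} = [x \; y]^T$. A summary of the relevant affine transforms is below with these changes to the scale and rotation matrices.

Rotation by $\theta$:

$$R = \begin{bmatrix} \cos\theta & -\sin\theta & 0 \\ \sin\theta & \cos\theta & 0 \\ 0 & 0 & 1 \end{bmatrix}$$

Scale by $s$:

$$S = \begin{bmatrix} s & 0 & 0 \\ 0 & s & 0 \\ 0 & 0 & 1 \end{bmatrix}$$

Translation by $dx$ and $dy$:

$$T = \begin{bmatrix} 1 & 0 & dx \\ 0 & 1 & dy \\ 0 & 0 & 1 \end{bmatrix}$$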
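Once all three transforms are 3x3 matrices, they can be multiplied together into a single matrix. The sketch below assumes the standard 3x3 forms (rotation and scale padded with a row and column from the identity) and uses illustrative values; the function names are hypothetical.

```python
import math

def matmul(A, B):
    """Matrix product A B for matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

def matvec(M, v):
    """Premultiply the vector v by the matrix M."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def rotation(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def scale(s):
    return [[s, 0.0, 0.0], [0.0, s, 0.0], [0.0, 0.0, 1.0]]

def translation(dx, dy):
    return [[1.0, 0.0, dx], [0.0, 1.0, dy], [0.0, 0.0, 1.0]]

# The rightmost matrix acts first: scale by 2, rotate by 90 degrees,
# then translate by (1, 0).
M = matmul(translation(1.0, 0.0), matmul(rotation(math.pi / 2), scale(2.0)))

p = matvec(M, [1.0, 0.0, 1.0])  # the point (1, 0) in homogeneous form
print([round(c, 6) for c in p])  # prints [1.0, 2.0, 1.0]
```

Following the point by hand gives the same answer: (1, 0) scales to (2, 0), rotates to (0, 2), and translates to (1, 2).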

Estimating Position on the PiDrone

✎Modified 2020-10-18 by sageshoyu

Now that we know how rotation, scale, and translation are represented as matrices, let’s look at how you will be using these matrices in the sensors project.

To estimate your drone’s position, you will be using a function from OpenCV called `estimateRigidTransform`. This function takes in two images, `src` and `dst`, and a boolean, `fullAffine`. The function returns a matrix estimating the affine transform that would turn the first image into the second image. The boolean `fullAffine` indicates whether you want to estimate the effect of shearing on the image, which is another affine transform. We don’t want this, so we set `fullAffine` to False.

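`estimateRigidTransform` returns a matrix in the form of:

$$E = \begin{bmatrix} s\cos\theta & -s\sin\theta & dx \\ s\sin\theta & s\cos\theta & dy \end{bmatrix}$$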

This matrix should look familiar, but it is slightly different from the matrices we have seen in this section. Let $R$, $S$, and $T$ be the rotation, scale, and translation matrices from the above summary box. Then, $E$ is the same as $TRS$, where the bottom row of $TRS$ is removed. You can think of $E$ as a matrix that first scales a vector $[x \; y \; 1]^T$ by a factor of $s$, then rotates it by $\theta$, then translates it by $dx$ in the $x$ direction and $dy$ in the $y$ direction, and then removes the 1 appended to the end of the vector to output $[x' \; y']^T$.
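The claim that the returned matrix is the product "translate times rotate times scale" with its bottom row removed can be checked numerically. This sketch does not call OpenCV; it builds the 2x3 matrix two ways from assumed parameter values and compares them. The names `closed_form_E` and `composed_E`, and the symbols `s`, `theta`, `dx`, `dy`, are chosen here for illustration.

```python
import math

def matmul(A, B):
    """Matrix product A B for matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

def closed_form_E(s, theta, dx, dy):
    """2x3 matrix written out directly from scale, angle, and offsets."""
    c, sn = math.cos(theta), math.sin(theta)
    return [[s * c, -s * sn, dx],
            [s * sn,  s * c, dy]]

def composed_E(s, theta, dx, dy):
    """Top two rows of (translation)(rotation)(scale) as 3x3 matrices."""
    c, sn = math.cos(theta), math.sin(theta)
    S = [[s, 0.0, 0.0], [0.0, s, 0.0], [0.0, 0.0, 1.0]]
    R = [[c, -sn, 0.0], [sn, c, 0.0], [0.0, 0.0, 1.0]]
    T = [[1.0, 0.0, dx], [0.0, 1.0, dy], [0.0, 0.0, 1.0]]
    return matmul(T, matmul(R, S))[:2]  # drop the bottom row [0, 0, 1]

# Assumed parameter values for the check.
s, theta, dx, dy = 1.5, math.radians(30), 4.0, -2.0
E1 = closed_form_E(s, theta, dx, dy)
E2 = composed_E(s, theta, dx, dy)

matches = all(math.isclose(a, b, abs_tol=1e-12)
              for row1, row2 in zip(E1, E2)
              for a, b in zip(row1, row2))
print(matches)  # prints True
```

The dropped bottom row is always `[0, 0, 1]`, so no information is lost by returning only the top two rows.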

Wow that was a lot of reading! Now on to the questions…

Questions

✎Modified 2020-10-18 by sageshoyu

- Your PiDrone is flying over a highly textured planar surface. The PiDrone’s current $x$ position is $x_0$, its current $y$ position is $y_0$, and its current yaw is $\phi_0$. Using the PiCamera, you take a picture of the highly textured planar surface with the PiDrone in this state. You move the PiDrone to a different state ($x_1$ is your $x$ position, $y_1$ is your $y$ position, and $\phi_1$ is your yaw) and then take a picture of the highly textured planar surface using the PiCamera. You give these pictures to `estimateRigidTransform` and it returns a matrix $E$ in the form shown above.

  Write expressions for $x_1$, $y_1$, and $\phi_1$. Your answers should be in terms of $x_0$, $y_0$, $\phi_0$, and the elements of $E$. Assume that the PiDrone is initially located at the origin and aligned with the axes of the global coordinate system.

  (Hint 1: Your solution does not have to involve matrix multiplication or other matrix operations. Feel free to pick out specific elements of the matrix using normal 0-indexing, e.g. $E[0][0]$ for the top-left element. Hint 2: Use the function `arctan2` in some way to compute the yaw.)