Odometry with Wheel Encoders

✎Modified 2020-10-28 by PhiDeltaEE

Odometry Calibration for your Duckiebot

Use the Wheel Encoders to Obtain a Better Calibration for your Duckiebot.

Odometry Calibration

As seen in the previous section, the differential drive model used for the Duckiebot depends on several parameters, which can be tuned for better performance. In this exercise, we will calibrate the Duckiebot using the wheel encoders, with the aim of obtaining a more accurate model that allows controlling the Duckiebot without the camera.

Mathematical Representation

The complete mathematical model for the Duckiebot can be found in the Duckiebot modeling section (#duckiebot-modeling).

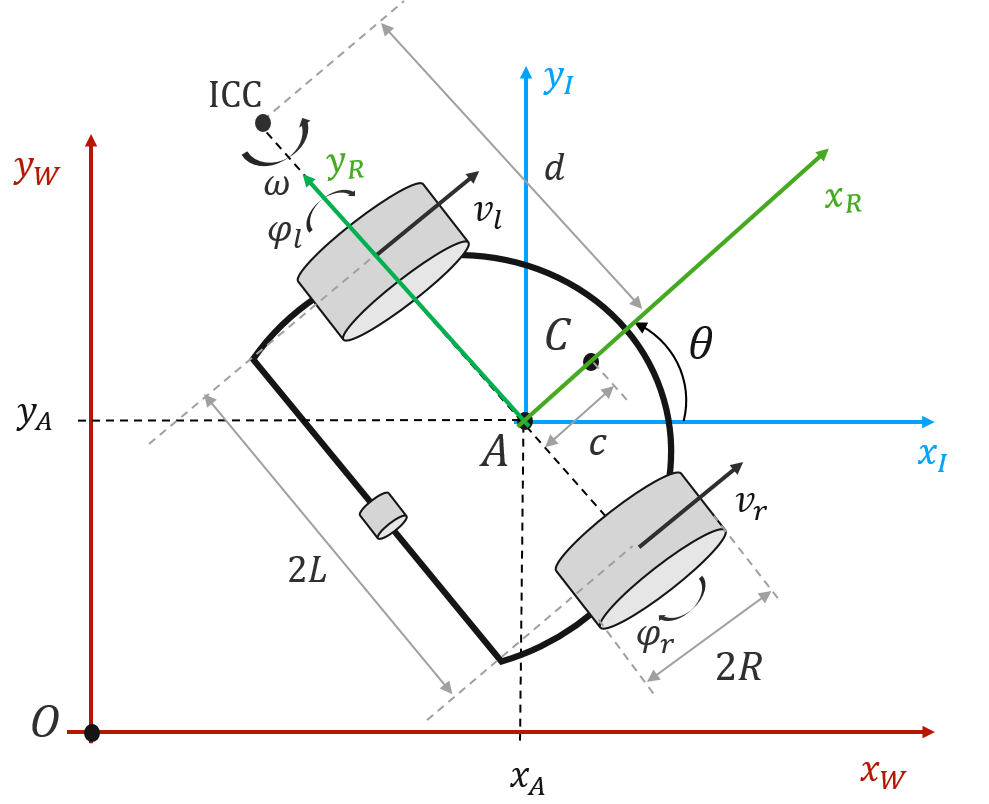

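This is a constrained kinematic model of the robot pose $q = [x, y, \theta]^T$.

To obtain the calibration parameters, namely the wheel radius $R$ and the half-baseline $L$ (so that $2L$ is the distance between the two wheels), we can use the wheel encoders. Each encoder tick corresponds to a fixed change in the wheel's angular position, $\alpha = 2\pi / N_{total}$, where $N_{total}$ is the number of ticks per wheel revolution. From this representation, the distance travelled by each wheel (independently) after $N_{ticks}$ ticks can be computed with the following formula:

$$d = R \, \alpha \, N_{ticks} = 2 \pi R \, \frac{N_{ticks}}{N_{total}}$$

Thus, the kinematic model for the Duckiebot can be written in terms of the distances $d_l$ and $d_r$ traveled by the left and right wheels in a given time interval $\Delta t$.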
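As a rough illustration of the tick-to-distance conversion, here is a minimal Python sketch. The function name `wheel_distance` and the numeric values (a 3 cm radius and 135 ticks per revolution) are placeholders chosen for the example, not calibrated values; check the encoder resolution of your own hardware.

```python
import math


def wheel_distance(n_ticks, radius, ticks_per_rev):
    """Distance rolled by one wheel for a signed tick count.

    n_ticks       -- ticks counted since the last reading (negative = backwards)
    radius        -- wheel radius in meters (the parameter being calibrated)
    ticks_per_rev -- encoder ticks per full wheel revolution
    """
    alpha = 2.0 * math.pi / ticks_per_rev  # wheel rotation per tick, in radians
    return radius * alpha * n_ticks


# Placeholder values for illustration only.
one_revolution = wheel_distance(n_ticks=135, radius=0.03, ticks_per_rev=135)
print(f"distance for one full revolution: {one_revolution:.4f} m")
```

One full revolution should return the wheel circumference, which is a quick sanity check before using the function on real tick counts.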

The Duckiebot model is shown below:

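The kinematic model (for the linear and angular velocity of point A) is then written as:

$$v_A = \frac{d_r + d_l}{2 \, \Delta t}, \qquad \omega = \frac{d_r - d_l}{2L \, \Delta t}$$

where $d_l$ and $d_r$ are the distances traveled by the left and right wheels during the time interval $\Delta t$, and $2L$ is the distance between the two wheels.

Thus the model, in global coordinates, can be written as:

$$\Delta x = v_A \, \Delta t \cos\theta, \qquad \Delta y = v_A \, \Delta t \sin\theta, \qquad \Delta \theta = \omega \, \Delta t$$

where $\Delta x$ is the world displacement in the x-direction, $\Delta y$ is the world displacement in the y-direction, and $\theta$ is the robot’s orientation.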
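The pose update in global coordinates can be sketched in a few lines of Python. This is only one possible discretization (a first-order update that uses the heading at the start of each interval), and the names `update_pose` and `HALF_BASELINE`, as well as the 5 cm value, are assumptions made for the example. Here `half_baseline` is half the distance between the wheels.

```python
import math

HALF_BASELINE = 0.05  # meters; placeholder value, half the wheel separation


def update_pose(x, y, theta, d_left, d_right, half_baseline):
    """Advance the pose (x, y, theta) by one pair of wheel displacements."""
    d_center = (d_right + d_left) / 2.0                   # travel of the axle midpoint
    d_theta = (d_right - d_left) / (2.0 * half_baseline)  # change in heading
    x += d_center * math.cos(theta)
    y += d_center * math.sin(theta)
    theta += d_theta
    return x, y, theta


# Drive 0.5 m straight, turn 90 degrees in place, drive 0.5 m straight again.
quarter_turn = HALF_BASELINE * math.pi / 2.0  # arc each wheel rolls for 90 degrees
pose = (0.0, 0.0, 0.0)
for d_l, d_r in [(0.5, 0.5), (-quarter_turn, quarter_turn), (0.5, 0.5)]:
    pose = update_pose(*pose, d_l, d_r, HALF_BASELINE)
print(pose)
```

Because the update uses the heading at the start of each interval, it is most accurate when the wheel displacements are read frequently, so that each step is short.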

Calibration Methodology

From the known parameters, we can now derive a calibration method in which the only unknowns are the wheel radius $R$ and the half-baseline $L$. The goal is to determine these parameters by mapping real-world positions to virtual coordinates (in the robot’s reference frame).

The method to do this is to follow a predetermined pattern on the ground, with known coordinates. This could be a series of lines on the floor, or the Duckietown which you have at home or at the lab. The robot will move on this predetermined pattern, and at every point, you will compare the ground truth (world reference frame as fixed in the real world) to the virtual representation of the Duckiebot.

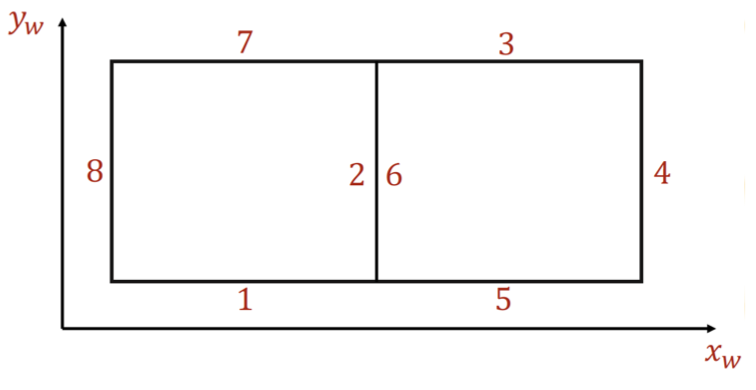

A proposed pattern is shown below, where every segment is 50 cm long. The goal is to manually drive the Duckiebot as accurately as possible through each keypoint (the corners), and then map the coordinates measured by the encoders to the real-world coordinates using least squares regression.

You should get two series of data points:

- The ground-truth positions, obtained from the known world frame at the keypoints while driving along the lines of the pattern.
- The estimated positions, obtained from the kinematics model of the Duckiebot as shown in the Mathematical Representation section.
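One possible way to set up the regression is sketched below; it is not necessarily the intended solution. It assumes that straight segments of known length are used to fit one radius per wheel, and that turns with a known heading change are used to fit the wheel separation. All tick counts are made-up numbers, `TICKS_PER_REV = 135` is an assumed encoder resolution, and `fit_scale` and `ticks_to_angle` are hypothetical helper names.

```python
import numpy as np

TICKS_PER_REV = 135  # assumed encoder resolution; check your hardware


def fit_scale(inputs, targets):
    """Least-squares slope k for the one-parameter model targets = k * inputs."""
    A = np.asarray(inputs, dtype=float).reshape(-1, 1)
    b = np.asarray(targets, dtype=float)
    solution = np.linalg.lstsq(A, b, rcond=None)[0]
    return float(solution[0])


def ticks_to_angle(ticks):
    """Wheel rotation in radians for an array of tick counts."""
    return 2.0 * np.pi * np.asarray(ticks, dtype=float) / TICKS_PER_REV


# Straight segments: ground-truth length = radius * wheel rotation.
straight_lengths = [0.5, 0.5, 0.5]  # meters, from the pattern on the floor
left_ticks = [338, 336, 339]        # made-up measurements
right_ticks = [335, 337, 336]
radius_left = fit_scale(ticks_to_angle(left_ticks), straight_lengths)
radius_right = fit_scale(ticks_to_angle(right_ticks), straight_lengths)
radius = 0.5 * (radius_left + radius_right)  # average of the two fitted radii

# Turns: (d_right - d_left) = wheel_separation * heading change.
turn_angles = [np.pi / 2, np.pi / 2, -np.pi / 2]  # ground-truth heading changes
turn_left_ticks = [-53, -54, 53]                  # made-up measurements
turn_right_ticks = [53, 54, -54]
arc_difference = (radius_right * ticks_to_angle(turn_right_ticks)
                  - radius_left * ticks_to_angle(turn_left_ticks))
wheel_separation = fit_scale(turn_angles, arc_difference)  # equals 2L

print(f"radius = {radius:.4f} m, wheel separation = {wheel_separation:.4f} m")
```

Splitting the fit into two one-parameter regressions keeps each problem linear. Fitting both parameters at once against the full trajectory is also possible, but it is nonlinear in the parameters.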

Odometry Calibration Exercise

✎Do the following:

- Create a copy of the Duckietown ROS template, or use your template from the previous exercise.
- Run keyboard control and manually drive your Duckiebot.
- Create a virtual representation of the Duckiebot’s position in the global frame (initialized at zero wherever you first place it).
- Track the Duckiebot’s position as inferred from the encoders, and compare it with the ground truth.
- Perform least squares to determine the model parameters $R$ and $L$.
- Update the configuration parameters using `rosparam set`. For the radius, take the average of the two values found.
- Take a video of your Duckiebot moving on a single-line trajectory, and upload a rosbag containing the measured $x$, $y$, and $\theta$ values and their timestamps.

Deliverable 1: Screenshot of the updated /data/config/calibrations/kinematics/HOSTNAME.yaml file.

Deliverable 2: Video of your Duckiebot following a defined trajectory from (0,0) to (0,X), and a rosbag containing the $x$, $y$, and $\theta$ values corresponding to your video.